Christopher Ren does a solid Elon Musk impression.

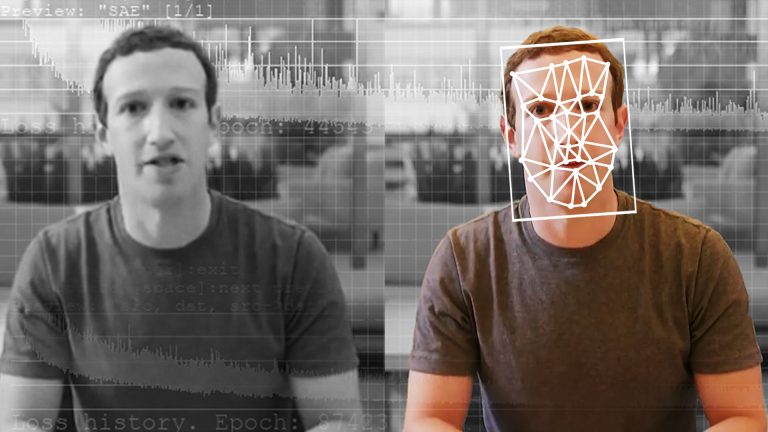

Ren is a product manager at Reality Defender, a company that makes tools to combat AI disinformation. During a video call last week, I watched him use some viral GitHub code and a single photo to generate a simplistic deepfake of Elon Musk that mapped onto his own face. The digital impersonation was meant to demonstrate how the startup’s new AI detection tool could work. As Ren masqueraded as Musk on our video chat, still frames from the call were sent to Reality Defender’s custom model for analysis, and the company’s widget on the screen alerted me that I was likely looking at an AI-generated deepfake and not the real Elon.

Sure, I never really thought we were on a video call with Musk, and the demonstration was built specifically to make Reality Defender’s early-stage tech look impressive, but the problem is entirely genuine. Real-time video deepfakes are a growing threat for governments, businesses, and individuals. Recently, the chairman of the US Senate Committee on Foreign Relations mistakenly took a video call with someone pretending to be a Ukrainian official. An international engineering company lost millions of dollars earlier in 2024 when one employee was tricked by a deepfake video call. Romance scams targeting everyday individuals have also employed similar techniques.